In this post, I will quickly show how to use the Office365-REST-Python-Client library to upload a large file to a SharePoint library.

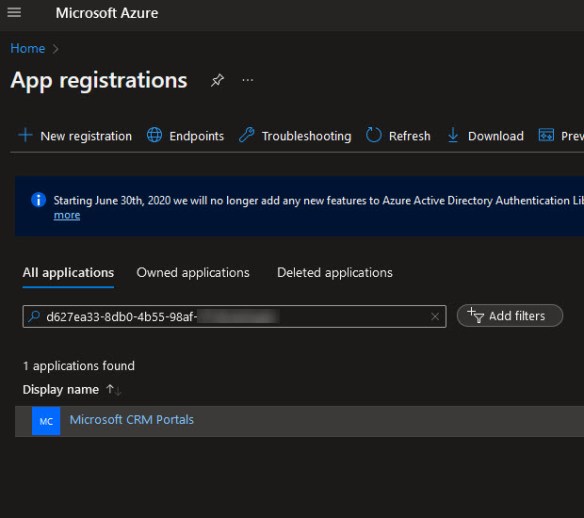

For this to work, you will need a certificate, an Azure App registration, and access to the target SharePoint site. I outlined all the necessary parts in this post: Modernizing Authentication in SharePoint Online.

Note: the linked post will output a .PFX cert, and the script below needs a .PEM cert. You can use this Python script to convert the cert:

# install this library if needed
# pip install cryptography
from cryptography.hazmat.primitives import serialization
from cryptography.hazmat.primitives.serialization import pkcs12

# Load the PFX file
with open('C:\\path_to_cert\\EXAMPLE.pfx', 'rb') as pfx:  # replace with your pfx file path
    pfx_data = pfx.read()

# The second argument is the PFX password as bytes, or None if the file has no password
private_key, certificate, additional_certificates = pkcs12.load_key_and_certificates(pfx_data, None)

# Write the unencrypted private key followed by the certificate into one PEM file
with open('NewCERT.pem', 'wb') as f:
    f.write(private_key.private_bytes(
        encoding=serialization.Encoding.PEM,
        format=serialization.PrivateFormat.TraditionalOpenSSL,
        encryption_algorithm=serialization.NoEncryption()
    ))
    f.write(certificate.public_bytes(serialization.Encoding.PEM))
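The upload script further down also asks for the certificate thumbprint. If you don't have it handy, it can usually be derived from the PEM file itself. Here is a minimal sketch using only the standard library, assuming the thumbprint your app registration shows is the uppercase SHA-1 hash of the DER-encoded certificate. `thumbprint_from_pem` is a hypothetical helper name, not part of any library.

```python
import hashlib
import re
import ssl

# Hypothetical helper: not part of cryptography or Office365-REST-Python-Client.
CERT_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----", re.DOTALL
)


def thumbprint_from_pem(pem_text):
    """Return the uppercase SHA-1 hex digest of the first certificate in pem_text."""
    match = CERT_BLOCK.search(pem_text)
    if match is None:
        raise ValueError("no certificate block found in PEM text")
    # Strip the PEM armor and base64-decode to get the raw DER bytes
    der_bytes = ssl.PEM_cert_to_DER_cert(match.group(0))
    return hashlib.sha1(der_bytes).hexdigest().upper()
```

Reading `NewCERT.pem` as text and passing its contents to this function should give a 40-character value you can compare with the thumbprint shown on the app registration. The private key block at the top of the file is skipped because only the certificate block is matched.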

Ok, with that out of the way, you can use this script to upload to a SharePoint library. In the script, I’ve commented out the line that would be used to upload to a folder within a library.

import os

from office365.sharepoint.client_context import ClientContext

cert_credentials = {
    "tenant": "abcxyz-1234-4567-8910-0e3d638792fb",
    "client_id": "abcddd-4444-4444-cccc-123456789111",
    "thumbprint": "7D8D8DF7D8D2F4DF8DF45D4FD8FD48DF5D8D",
    "cert_path": "RestClient\\NewCERT.pem"
}

ctx = ClientContext("https://tacoranch.sharepoint.com/sites/python").with_client_certificate(**cert_credentials)

# Confirm the connection works by loading the site and printing its URL
current_web = ctx.web.get().execute_query()
print("{0}".format(current_web.url))

filename = "LargeExcel.xlsx"
folder_path = "C:\\code\\py"
local_path = os.path.join(folder_path, filename)


def print_upload_progress(offset):
    # type: (int) -> None
    # Called after each chunk with the number of bytes uploaded so far
    file_size = os.path.getsize(local_path)
    print(
        "Uploaded '{0}' bytes from '{1}'...[{2}%]".format(
            offset, file_size, round(offset / file_size * 100, 2)
        )
    )


#upload to a folder
#target_url = "Shared Documents/folderA/folderB"
target_url = "Shared Documents"
target_folder = ctx.web.get_folder_by_server_relative_url(target_url)
size_chunk = 1000000  # chunk size in bytes (about 1 MB)

with open(local_path, "rb") as f:
    uploaded_file = target_folder.files.create_upload_session(
        f, size_chunk, print_upload_progress, filename
    ).execute_query()

print("File {0} has been uploaded successfully".format(uploaded_file.serverRelativeUrl))
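To make the roles of `size_chunk` and the progress callback concrete, here is a small standard-library sketch of the general pattern: read a file in fixed-size chunks and report the running byte offset after each one. It is an illustration only, not the library's internal implementation, and `read_in_chunks` is a hypothetical name.

```python
import io


def read_in_chunks(file_obj, chunk_size, on_chunk=None):
    """Yield successive chunks from file_obj, reporting the running offset."""
    offset = 0
    while True:
        chunk = file_obj.read(chunk_size)
        if not chunk:
            break  # end of file
        offset += len(chunk)
        if on_chunk is not None:
            on_chunk(offset)  # same shape as print_upload_progress(offset)
        yield chunk


if __name__ == "__main__":
    data = io.BytesIO(b"x" * 2500)
    for chunk in read_in_chunks(data, 1000, lambda off: print("read", off, "bytes")):
        pass  # a real uploader would send each chunk to the server here
```

With 2,500 bytes and a chunk size of 1,000, the callback fires three times, with offsets 1000, 2000, and 2500. A smaller chunk size means more round trips but finer-grained progress output.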

If you receive an error stating you don’t have access, double-check that you’ve added the App Registration to the target SharePoint site permissions. Again, this is noted in the blog post linked at the beginning of this post.
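Before digging into site permissions, it can help to rule out local mistakes in the credentials dictionary. The sketch below is a hypothetical pre-flight check; the function name and the rules are mine, not the library's. It assumes the thumbprint is a SHA-1 value (40 hexadecimal characters), and it cannot detect missing SharePoint permissions.

```python
import os
import re

REQUIRED_KEYS = ("tenant", "client_id", "thumbprint", "cert_path")


def check_cert_credentials(creds):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for key in REQUIRED_KEYS:
        if not creds.get(key):
            problems.append("missing value for '{0}'".format(key))
    thumbprint = creds.get("thumbprint") or ""
    # Assumption: SHA-1 thumbprint, i.e. exactly 40 hex characters
    if thumbprint and not re.fullmatch(r"[0-9A-Fa-f]{40}", thumbprint):
        problems.append("thumbprint is not 40 hexadecimal characters")
    cert_path = creds.get("cert_path") or ""
    if cert_path and not os.path.isfile(cert_path):
        problems.append("certificate file not found: {0}".format(cert_path))
    return problems
```

Calling `check_cert_credentials(cert_credentials)` before creating the `ClientContext` and printing any returned problems is one way to use it. If the list comes back empty and the access error persists, the site permissions are the next thing to check.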

Consider this a workaround until MS Graph is out of its latest beta and there’s more support for easily uploading to SharePoint.

What if you need to upload a file and set a column value? When working with SharePoint via the API, you must be mindful of the column names: the display name shown in the UI might not match the internal name the API expects. Using the script above as my starting point, I add the following script to the end. In this example, I’m setting two fields: ReportName and ReportDate.

import datetime  # in a combined script, move this import to the top

#get the file that was just uploaded
file_item = uploaded_file.listItemAllFields

# Define a dictionary of field names and their new values
fields_to_update = {
    "ReportName": "My TPS Report",
    "ReportDate": datetime.datetime.now().isoformat(),
    # Add more fields here as needed
}

# Iterate over the dictionary and update each field
for field_name, new_value in fields_to_update.items():
    file_item.set_property(field_name, new_value)

# Commit the changes
file_item.update()
ctx.execute_query()
print("Report fields were updated")
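One detail worth watching in the snippet above: `datetime.datetime.now().isoformat()` produces a local time with no time zone information. A hedged alternative is to send an explicit UTC timestamp. The sketch below assumes the date column accepts an ISO 8601 string ending in `Z`, which you should verify against your own list; `to_utc_iso` is a hypothetical helper.

```python
from datetime import datetime, timezone


def to_utc_iso(value):
    """Convert an aware datetime to an ISO 8601 UTC string such as 2024-01-02T03:04:05Z."""
    if value.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return value.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


# Example value that could replace the ReportDate entry in fields_to_update
report_date = to_utc_iso(datetime.now(timezone.utc))
```

Rejecting naive datetimes is a deliberate choice here: it forces the caller to state which time zone a value is in rather than letting the conversion guess.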

How do you get a list of all the columns in a list or library? The script below will output each column’s internal and display name.

from office365.sharepoint.client_context import ClientContext

cert_credentials = {
    "tenant": "abcxyz-1234-4567-8910-0e3d638792fb",
    "client_id": "abcddd-4444-4444-cccc-123456789111",
    "thumbprint": "7D8D8DF7D8D2F4DF8DF45D4FD8FD48DF5D8D",
    "cert_path": "RestClient\\NewCERT.pem"
}

ctx = ClientContext("https://tacoranch.sharepoint.com/sites/python").with_client_certificate(**cert_credentials)

current_web = ctx.web.get().execute_query()
print("{0}".format(current_web.url))

# Get the target list or library
list_or_library = ctx.web.lists.get_by_title('TPS-Reports')

# Load the fields
fields = list_or_library.fields.get().execute_query()

# Print the field names
for field in fields:
    print("Field internal name: {0}, Field display name: {1}".format(field.internal_name, field.title))
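Once you have those internal and display names, a small lookup can translate the name you see in the UI into the name the API expects. This is a sketch over plain `(internal_name, title)` pairs so it runs without a SharePoint connection; the sample pairs and the helper name `build_display_to_internal` are made up for illustration.

```python
def build_display_to_internal(pairs):
    """Map each display name to the list of internal names that use it."""
    lookup = {}
    for internal_name, title in pairs:
        # Display names are not guaranteed unique, so keep every match
        lookup.setdefault(title, []).append(internal_name)
    return lookup


# Made-up sample data; in the script above you could collect
# [(field.internal_name, field.title) for field in fields] instead
sample_pairs = [
    ("ReportName", "Report Name"),
    ("ReportDate", "Report Date"),
    ("Title", "Title"),
]
lookup = build_display_to_internal(sample_pairs)
print(lookup["Report Name"])  # ['ReportName']
```

Returning a list per display name keeps the helper honest when two columns share a title; the caller can then decide which internal name to pass to `set_property`.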